To run it, we need the following:

- The model weights: of course, we do not want to train the model from scratch, so we need the weights to load a pre-trained model.
- A YAML configuration file for our model.
- A source image: this could be, for example, a portrait.
- A driving video: to start, it is best to download a video with a clearly visible face.

To get some results quickly and test the performance of the algorithm, you can use this source image and this driving video. A simple YAML configuration file is given below. Open a text editor, copy and paste the following lines and save the file as conf.yml.

    model_params:
      common_params:
        num_kp: 10
        num_channels: 3
        estimate_jacobian: True
      kp_detector_params:
        temperature: 0.1
        block_expansion: 32
        max_features: 1024
        scale_factor: 0.25
        num_blocks: 5
      generator_params:
        block_expansion: 64
        max_features: 512
        num_down_blocks: 2
        num_bottleneck_blocks: 6
        estimate_occlusion_map: True
        dense_motion_params:
          block_expansion: 64
          max_features: 1024
          num_blocks: 5
          scale_factor: 0.25
      discriminator_params:
        scales:
        block_expansion: 32
        max_features: 512
        num_blocks: 4

Now, we are ready to have a statue mimic Leonardo DiCaprio! To get your results, just run the following command.

    deep_animate

For example, if you have downloaded everything in the same folder, cd to that folder and run:

    deep_animate 00.png 00.mp4 conf.yml deep_animator_

On my CPU, it takes around five minutes to get the generated video. It will be saved into the same folder unless specified otherwise by the -dest option. Also, you can use GPU acceleration with the -device cuda option.

In this story, we presented the work done by A. Siarohin et al. and how to use it to obtain great results with no effort. Finally, we used deep-animator, a thin wrapper, to animate a statue.

Although there are some concerns about such technologies, they can have various applications and also show how easy it is nowadays to generate fake stories, raising awareness about it.

Learning Rate is my weekly newsletter for those who are curious about the world of AI and MLOps.
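For scripted experiments, the command-line call from the example above can also be assembled from Python. The snippet below is only a sketch: the helper names `build_deep_animate_command` and `missing_inputs` are made up for illustration, the argument order is copied from the example command in this story, and the weights path is a placeholder because the actual file name is not spelled out in full here. It builds and prints the command; it does not run it.

```python
import shlex
from pathlib import Path
from typing import List


def build_deep_animate_command(source_image: str, driving_video: str,
                               config: str, weights: str) -> List[str]:
    """Return the argument list for the deep_animate call.

    The positional order (image, video, config, weights) mirrors the
    example command in the article; it is an assumption, not a spec.
    """
    return ["deep_animate", source_image, driving_video, config, weights]


def missing_inputs(*paths: str) -> List[str]:
    """Return the paths that do not point to an existing file."""
    return [p for p in paths if not Path(p).is_file()]


# Placeholder weights path: replace it with the file you downloaded.
inputs = ("00.png", "00.mp4", "conf.yml", "path/to/model_weights")
command = build_deep_animate_command(*inputs)

absent = missing_inputs(*inputs)
if absent:
    print("Missing input files:", ", ".join(absent))
print("Command to run:", shlex.join(command))
```

Once all four files are in place, the list in `command` can be handed to `subprocess.run(command, check=True)`. Passing a list rather than a single string avoids quoting problems with paths that contain spaces.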

For example, let us look at the GIF below: President Trump drives the cast of Game of Thrones to talk and move like him.

Next, the video generator takes as input the output of the motion detector and the source image and animates it according to the driving video: it warps the source image in ways that resemble the driving video and inpaints the parts that are occluded. Figure 1 depicts the framework architecture.

The source code of this paper is on GitHub. What I did was create a simple shell script, a thin wrapper, that utilizes the source code and can be used easily by everyone for quick experimentation. To use it, you first need to install the module. Run `pip install deep-animator` to install the library in your environment.
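As a rough illustration of the warp-and-inpaint idea described above, here is a toy sketch on a single row of pixels. It is not the paper's method: the real generator works on learned feature maps, predicts its occlusion map with a neural network, and inpaints with a decoder. Here the motion field is a hand-written integer shift, "occluded" simply means that the sample falls outside the source, and the mean-value fill is a made-up stand-in for inpainting. The function names are hypothetical.

```python
from typing import List, Tuple


def backward_warp(source: List[float], flow: List[int]) -> Tuple[List[float], List[int]]:
    """Sample source[i + flow[i]] for every output position i.

    Returns the warped row and a visibility mask: 1 where a source pixel
    was found, 0 where the sample fell outside the source ("occluded").
    """
    warped, visible = [], []
    for i, shift in enumerate(flow):
        j = i + shift
        if 0 <= j < len(source):
            warped.append(source[j])
            visible.append(1)
        else:
            warped.append(0.0)
            visible.append(0)
    return warped, visible


def inpaint(warped: List[float], visible: List[int]) -> List[float]:
    """Fill occluded positions with the mean of the visible ones (toy stand-in)."""
    seen = [value for value, mask in zip(warped, visible) if mask]
    fill = sum(seen) / len(seen) if seen else 0.0
    return [value if mask else fill for value, mask in zip(warped, visible)]


source_row = [10.0, 20.0, 30.0, 40.0]
flow = [1, 1, 1, 1]  # every output pixel looks one step to the right

warped, visible = backward_warp(source_row, flow)
result = inpaint(warped, visible)
print(warped, visible, result)
```

The last position has nothing to sample from, so the mask marks it as occluded and the fill step supplies a value. This is the role the occlusion map and the inpainting play in the generator, only at a much smaller scale.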

In this paper, the authors, Aliaksandr Siarohin, Stéphane Lathuilière, Sergey Tulyakov, Elisa Ricci and Nicu Sebe, present a novel way to animate a source image given a driving video, without any additional information or annotation about the object to animate. Under the hood, they use a neural network trained to reconstruct a video, given a source frame (still image) and a latent representation of the motion in the video, which is learned during training. At test time, the model takes as input a new source image and a driving video (e.g. a sequence of frames) and predicts how the object in the source image moves according to the motion depicted in these frames. The model tracks everything that is interesting in an animation: head movements, talking, eye tracking and even body action.

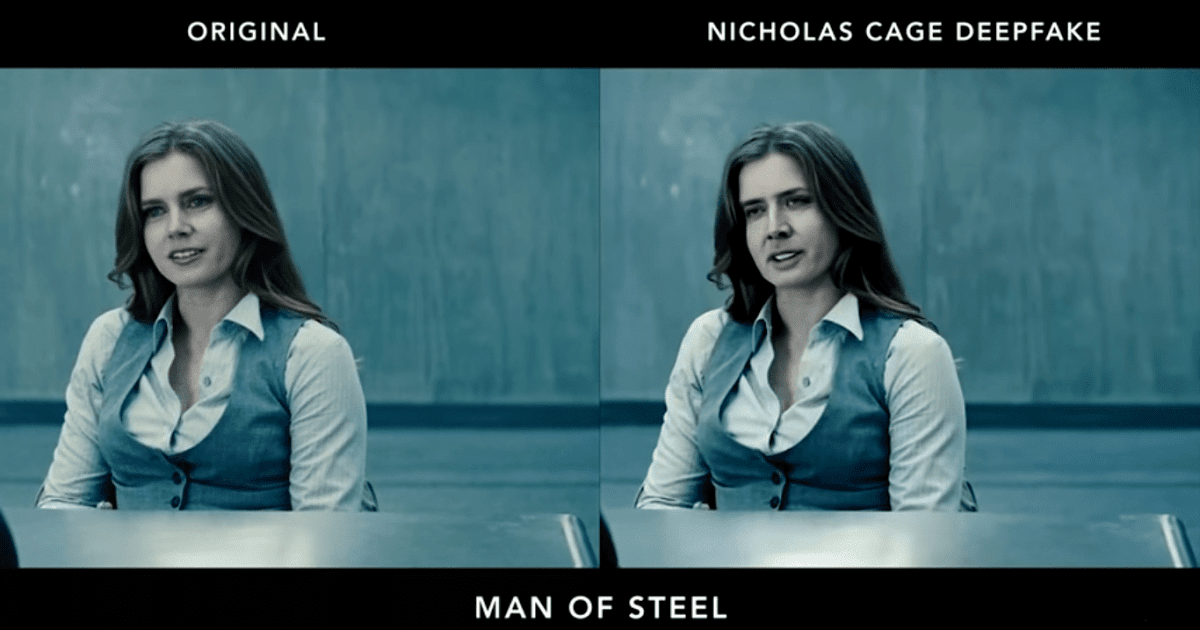

Photo by Christian Gertenbach on Unsplash

Do you dance? Is there a favourite dancer or performer whose moves you would like to see yourself copying? Well, now you can!

Imagine having a full-body picture of yourself. Then all you need is a solo video of your favourite dancer performing some moves. Not that hard now that TikTok is taking over the world…

Image animation uses a video sequence to drive the motion of an object in a picture. In this story, we see how image animation technology is now ridiculously easy to use, and how you can animate almost anything you can think of. To this end, I transformed the source code of a relevant publication into a simple script, creating a thin wrapper that anyone can use to produce DeepFakes. With a source image and the right driving video, everything is possible.

In this article, we talk about a new publication (2019), part of Advances in Neural Information Processing Systems 32 (NeurIPS 2019), called “First Order Motion Model for Image Animation”.
